Training vision models for edge deployment often requires handling variations in appearance such as surface properties, significant changes in lighting and environmental conditions, and the limited availability of defect or anomaly examples in real-world scenarios. These challenges can lead to models that perform well in controlled settings but struggle when deployed on devices operating in dynamic environments. The examples throughout this blog post demonstrate these challenges in practice and highlight how thoughtful data preparation and synthetic data can help address them.

Variations in Product Appearance

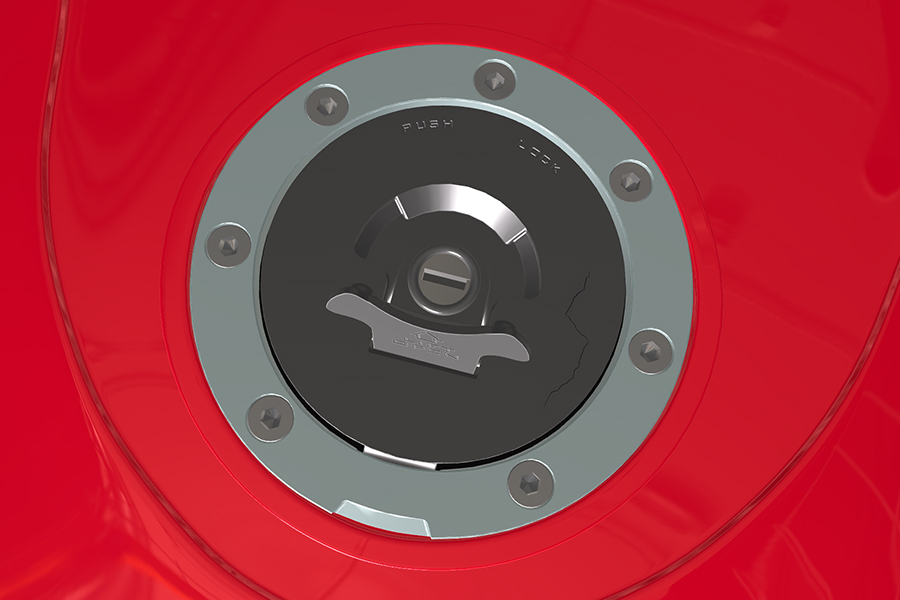

One major challenge is ensuring the model can handle variations in the appearance of the target objects. For instance, consider a computer vision model inspecting a motor component. The same motor part might come in different paint colors or materials, or have various logos and markings. If the training dataset doesn’t represent these variations, the model could fail to recognize the part when a new color or slight design variation appears in the field. A robust model needs to learn the essential features of the part regardless of color or minor cosmetic differences.

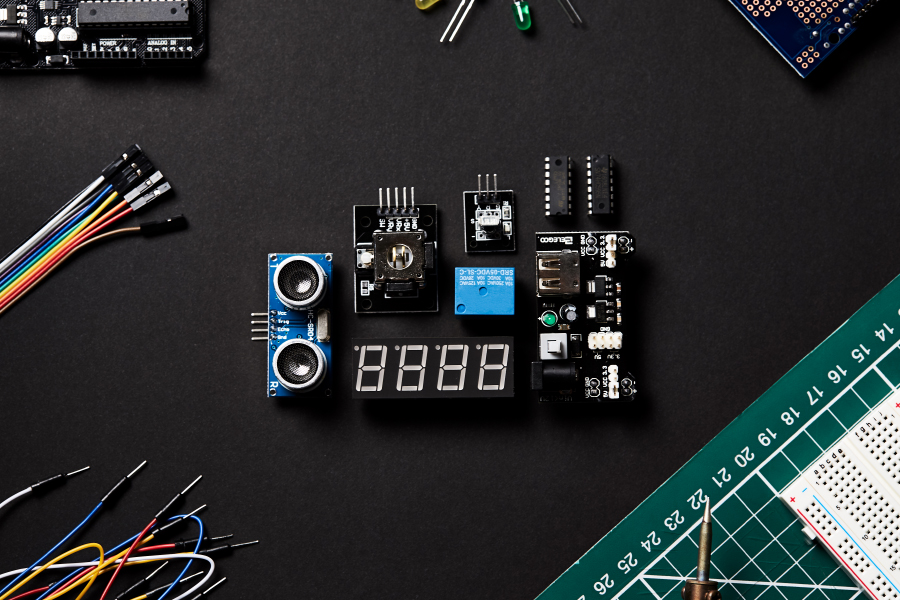

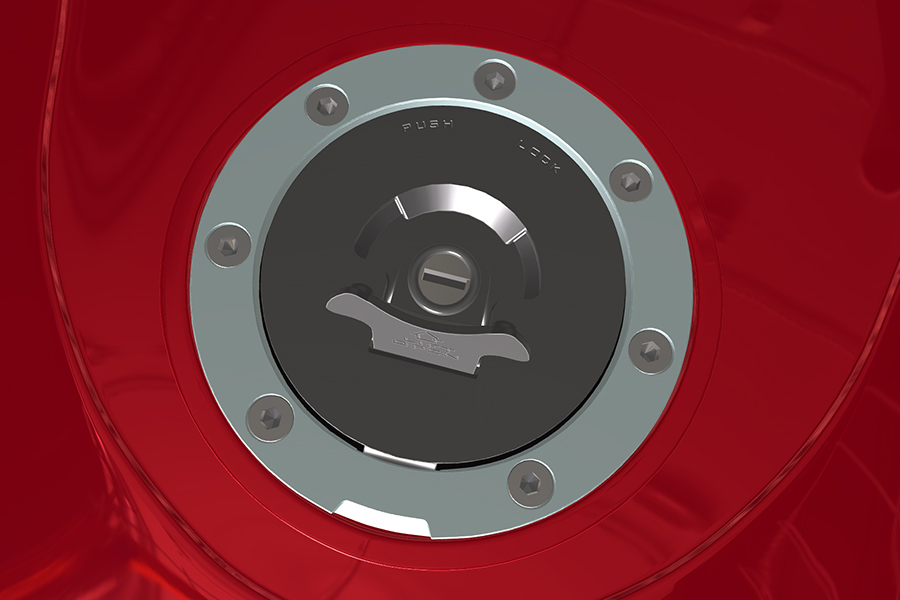

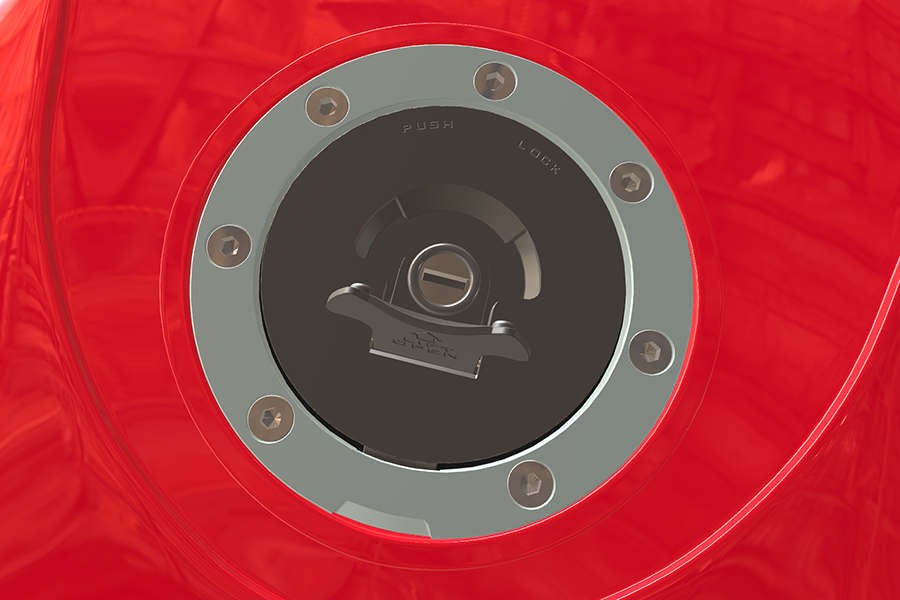

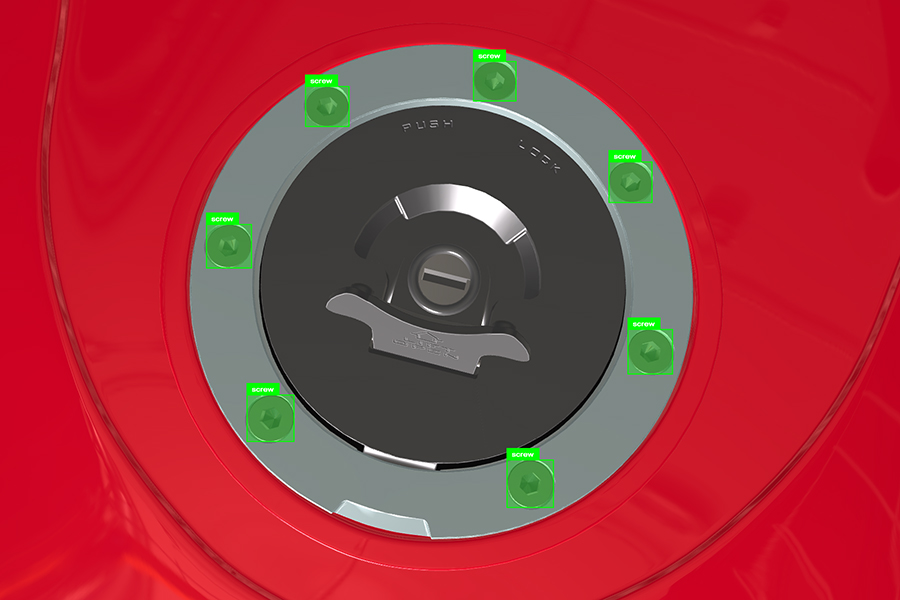

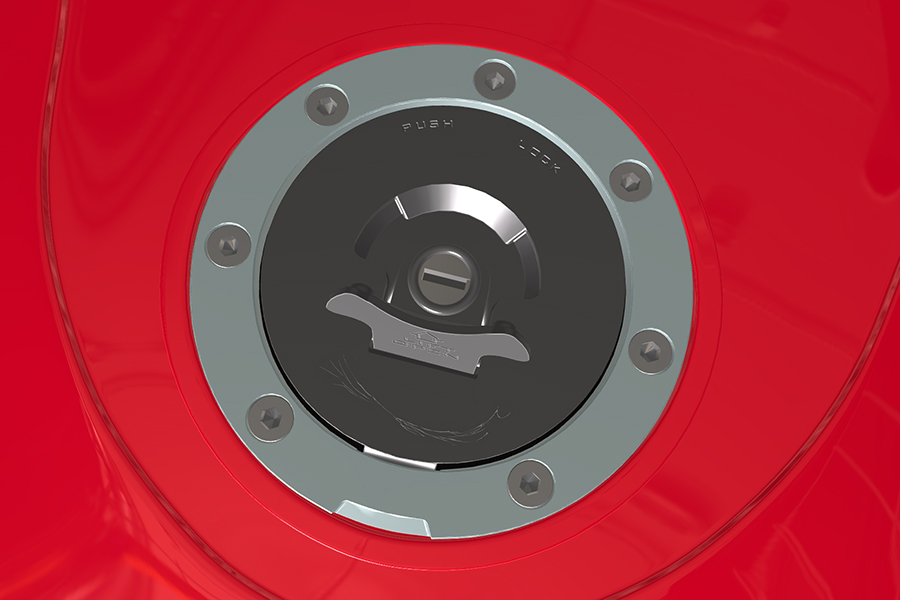

Gathering real images of every color variant or version can be difficult and time-consuming. This is where data augmentation and synthetic data become valuable. Simple augmentation techniques (like color shifting or filtering existing images) can help create some variability from limited data. Synthetic data generation goes a step further by rendering 3D models of the part in different colors or finishes, producing new images that mimic real variations without needing to physically photograph each one. By expanding the dataset to include these color and material differences, we prevent the model from overfitting to a single appearance. In fact, a diverse dataset with variations (in color, texture, etc.) is critical for models to generalize well and avoid surprises in production.
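As a rough illustration of the simple color-shifting augmentation mentioned above, the sketch below rotates the hue of a few RGB pixels using only the Python standard library. The helper names (`shift_hue`, `color_variants`), the sample pixel values, and the chosen hue steps are assumptions made for illustration; a real pipeline would typically apply the same idea to whole images with a vectorized image library.

```python
import colorsys

def shift_hue(pixels, degrees):
    """Rotate the hue of each (r, g, b) pixel (0-255 ints) by `degrees`."""
    shifted = []
    for r, g, b in pixels:
        # Convert to HSV so hue can change without touching brightness.
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        h = (h + degrees / 360.0) % 1.0
        r2, g2, b2 = colorsys.hsv_to_rgb(h, s, v)
        shifted.append((round(r2 * 255), round(g2 * 255), round(b2 * 255)))
    return shifted

def color_variants(pixels, hue_steps=(0, 60, 120, 180, 240, 300)):
    """Build one recolored copy of the pixels per hue step (hypothetical steps)."""
    return {deg: shift_hue(pixels, deg) for deg in hue_steps}

# Hypothetical sample: two red painted pixels and one gray metal pixel.
red_part = [(200, 30, 30), (180, 20, 20), (128, 128, 128)]
variants = color_variants(red_part)
```

Because gray pixels have zero saturation, they are unchanged by the hue rotation, so unpainted metal stays the same while the painted surface changes color. This only approximates a repaint; it does not change material properties such as gloss or texture, which is where rendered synthetic data goes further.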

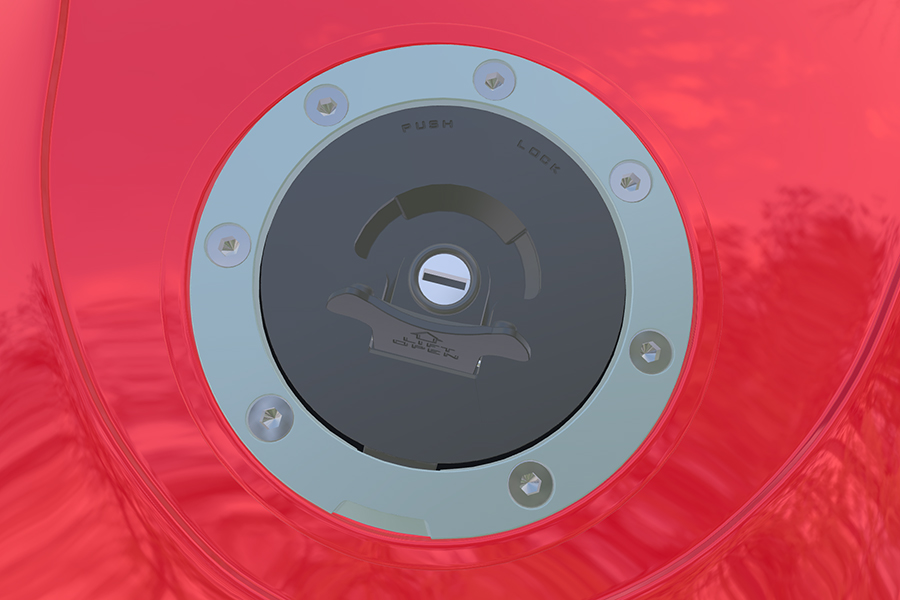

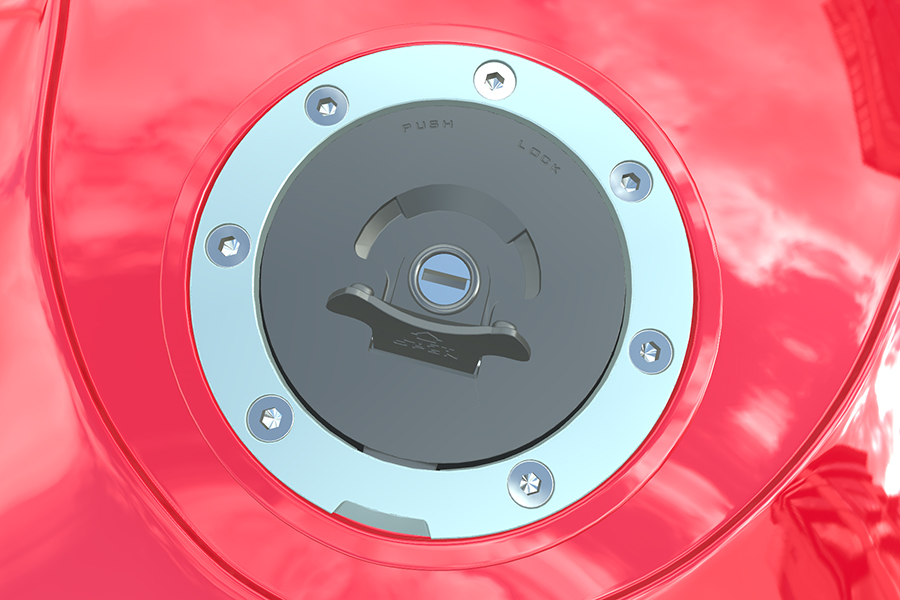

Synthetic image


Lighting and Environmental Variations

Edge devices often operate in uncontrolled or changing environments, so another key challenge is variation in lighting and background conditions. Lighting changes can significantly affect how an image appears. A part might be clearly visible under bright, diffuse light but appear shadowy or washed out under poor lighting. Images captured in a factory during the daytime versus at night, or on a sunny line versus a dimly lit station, can differ greatly in brightness, contrast, and color tone. Any fluctuation in light intensity or color temperature directly influences the level of detail, contrast, and color accuracy in images, which means a model trained only on one lighting condition may struggle when deployed under another. Background changes, such as different machinery behind the object or cluttered versus clean scenes, can also confuse a model if they are not represented in the training data. Edge deployment environments may even vary between device locations, and it is common for models trained in one setting to fail in another due to differences in lighting and scene geometry.

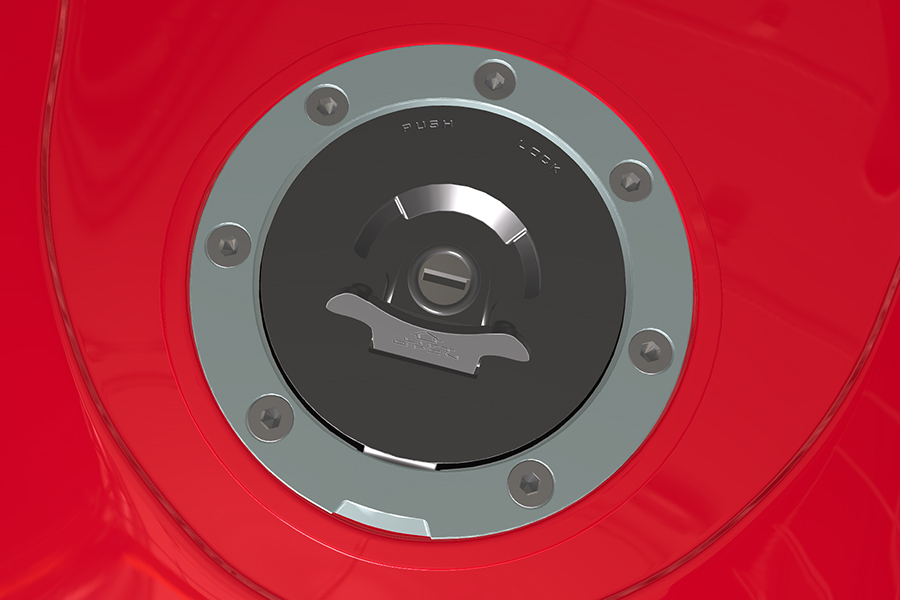

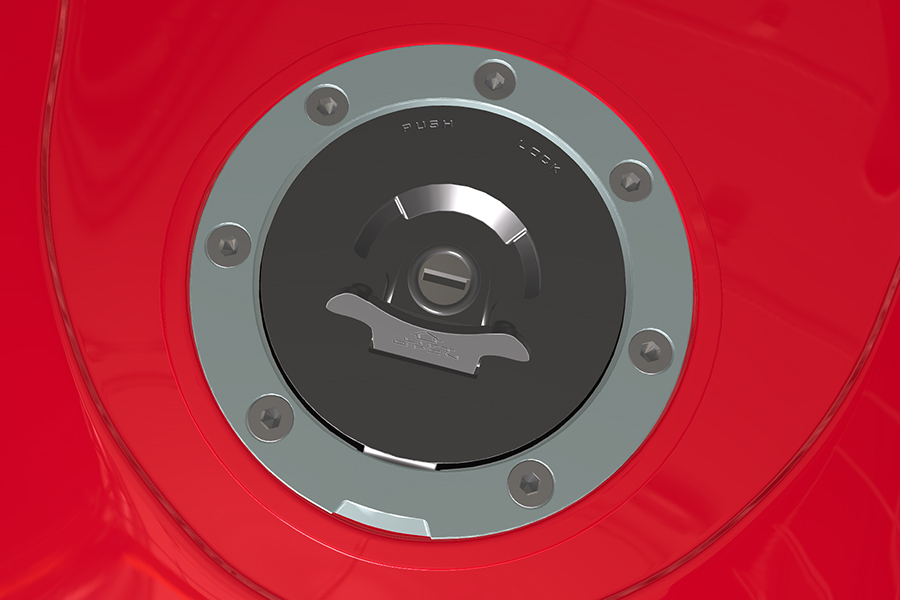

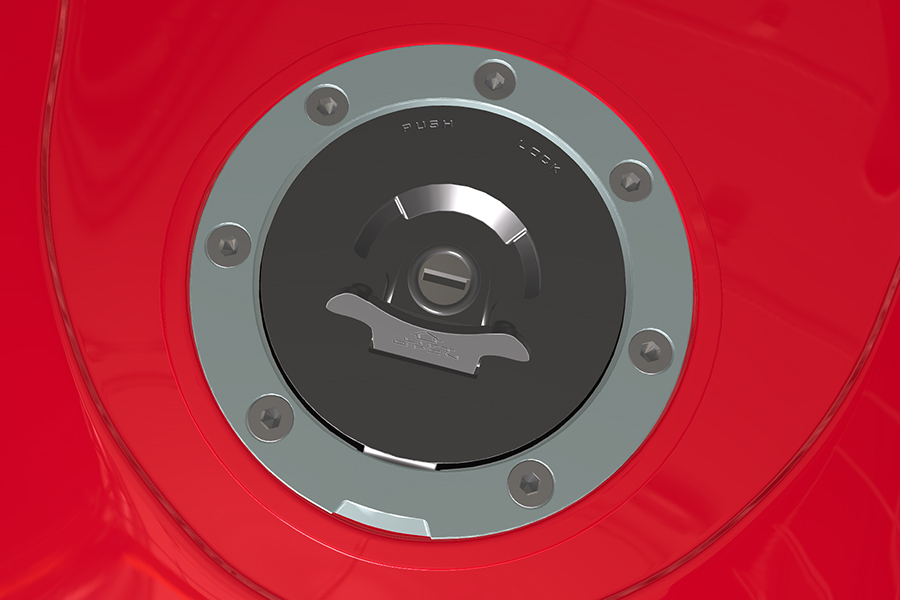

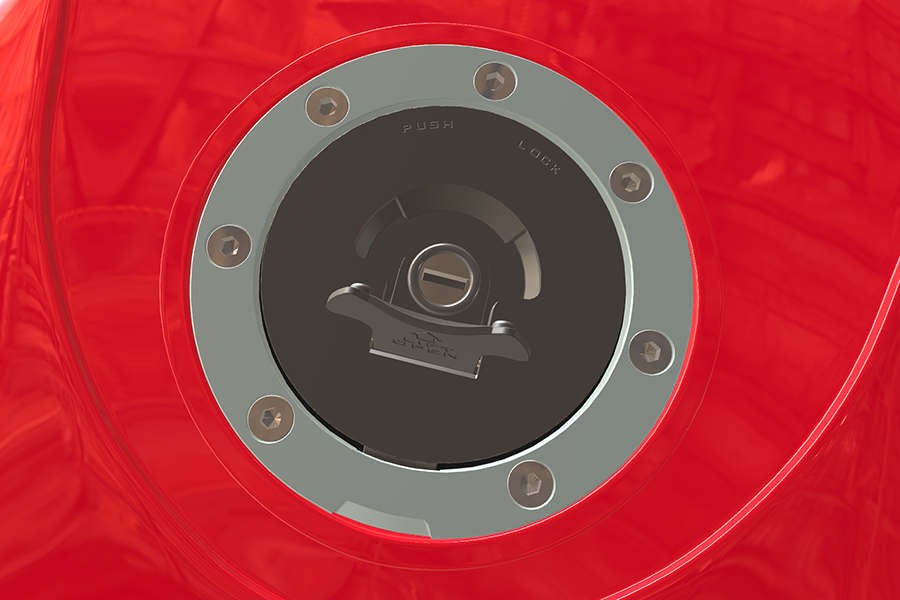

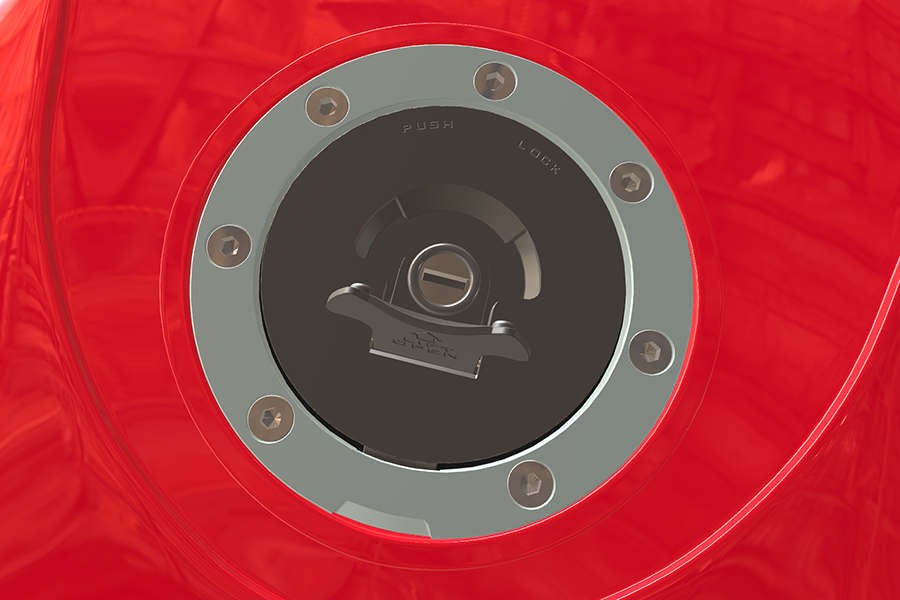

To address this, a training dataset needs to cover a broad range of environmental conditions. Synthetic data offers an effective way to achieve this. The same scene can be simulated under different lighting, from bright overhead lights to dim, angled lighting with shadows, and the appearance of the object can be observed across these variations. Backgrounds and camera angles can also be systematically adjusted. This controlled diversity in training images helps the model become more robust to these factors. Including varied lighting scenarios and viewpoints helps the model avoid failing when it encounters unfamiliar conditions in the real world. By training on this broader set of conditions, the edge AI model learns to focus on the object itself rather than the particular lighting or background present in the image.
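One way to picture this kind of controlled diversity is as random sampling over scene parameters before each render. The sketch below is a minimal example under stated assumptions: the `SceneConfig` fields, the value ranges, and the background names are all hypothetical, and the step that actually renders an image from each configuration is left out because it depends on the rendering tool in use.

```python
import random
from dataclasses import dataclass

@dataclass
class SceneConfig:
    light_intensity: float       # relative brightness, 1.0 = nominal
    color_temperature_k: int     # light color in kelvin (warm to cool)
    light_elevation_deg: float   # 90 = overhead, low values = angled light with long shadows
    camera_azimuth_deg: float    # camera position around the object
    background: str              # identifier of the backdrop to use

# Hypothetical background identifiers.
BACKGROUNDS = ["clean_bench", "conveyor", "cluttered_workshop"]

def sample_scene(rng):
    """Draw one random scene configuration (ranges are illustrative assumptions)."""
    return SceneConfig(
        light_intensity=rng.uniform(0.2, 1.5),
        color_temperature_k=rng.randint(2700, 6500),
        light_elevation_deg=rng.uniform(15.0, 90.0),
        camera_azimuth_deg=rng.uniform(0.0, 360.0),
        background=rng.choice(BACKGROUNDS),
    )

# A fixed seed keeps the generated dataset reproducible.
rng = random.Random(42)
scenes = [sample_scene(rng) for _ in range(1000)]
```

Sampling each parameter independently means that lighting, viewpoint, and background are decorrelated in the training set, which is what encourages the model to rely on the object rather than on its surroundings.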

Synthetic image with variation in reflections (indoor)


Ensuring Perfect and Consistent Annotations

High-quality data annotation is the backbone of any supervised computer vision model. For edge AI models, this is especially critical: lightweight models have limited capacity and cannot easily absorb noisy or inconsistent labels. Any errors or ambiguities in the training labels can directly degrade a small model’s performance, since it doesn’t have the luxury of millions of parameters to smooth over mislabeled examples. One major challenge is that creating perfect annotations on real images is labor-intensive and prone to human error. In manufacturing or industrial settings, images may need detailed labeling (e.g., bounding boxes or segmentation for tiny components or defects), and maintaining consistency across thousands of images is difficult. The quality of labeling directly impacts system performance; inconsistent annotation (for example, one annotator labels an edge of the part as “defect” while another does not) can confuse the model. Moreover, manual labeling is time-consuming; it can become a bottleneck when you need to expand or update the dataset.
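One common way to surface the annotator disagreement described above is to compare the boxes two annotators drew for the same image using intersection over union (IoU). The sketch below is only illustrative: the `(x_min, y_min, x_max, y_max)` box format, the `flag_disagreements` helper, the image identifiers, and the 0.8 threshold are assumptions, not features of any particular labeling tool.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def flag_disagreements(labels_a, labels_b, threshold=0.8):
    """Return image ids where the two annotators' boxes overlap less than `threshold`."""
    shared = sorted(labels_a.keys() & labels_b.keys())
    return [img for img in shared if iou(labels_a[img], labels_b[img]) < threshold]

# Hypothetical labels from two annotators (one box per image).
annotator_a = {"img_001": (10, 10, 50, 50), "img_002": (0, 0, 10, 10)}
annotator_b = {"img_001": (10, 10, 50, 50), "img_002": (5, 0, 15, 10)}
flagged = flag_disagreements(annotator_a, annotator_b)
```

Here the annotators agree exactly on `img_001`, while their boxes for `img_002` overlap by only one third, so that image would be sent back for review.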

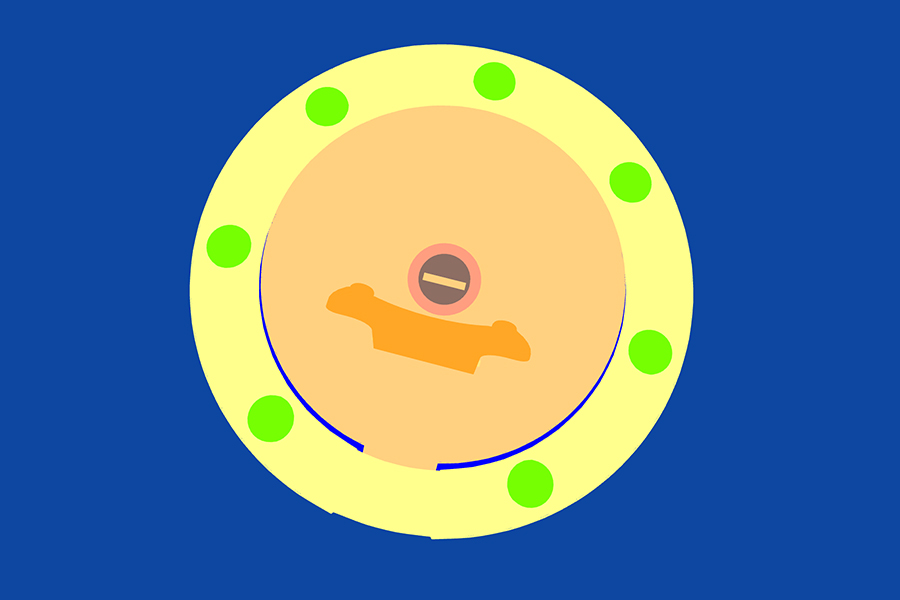

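The challenge, therefore, is obtaining fully accurate, consistent labels at scale. This is an area where synthetic data offers a huge advantage: because synthetic images are rendered from a 3D environment in which the position and shape of every object are known exactly, ground-truth annotations such as bounding boxes and segmentation masks can be computed directly rather than drawn by hand. They come “for free” and with perfect accuracy.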
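To make the idea of annotations derived from the 3D scene concrete, the sketch below projects the corners of an object's 3D bounding box through a simple pinhole camera model to obtain a 2D bounding box. It is a minimal sketch, assuming an axis-aligned box given in camera coordinates, an object entirely in front of the camera, and no lens distortion; the function names and the numeric values are hypothetical.

```python
from itertools import product

def project_point(point, focal_px, cx, cy):
    """Pinhole projection of a camera-space point (x, y, z) with z > 0 to pixels."""
    x, y, z = point
    return (focal_px * x / z + cx, focal_px * y / z + cy)

def box_corners(center, size):
    """Eight corners of an axis-aligned 3D box given its center and (w, h, d) size."""
    px, py, pz = center
    w, h, d = size
    return [
        (px + sx * w / 2, py + sy * h / 2, pz + sz * d / 2)
        for sx, sy, sz in product((-1, 1), repeat=3)
    ]

def bbox_2d(center, size, focal_px, cx, cy):
    """2D box (u_min, v_min, u_max, v_max) enclosing all projected corners."""
    pts = [project_point(p, focal_px, cx, cy) for p in box_corners(center, size)]
    us = [u for u, _ in pts]
    vs = [v for _, v in pts]
    return (min(us), min(vs), max(us), max(vs))

# Hypothetical scene: a 20 x 10 x 20 cm part, 2 m in front of a 1280 x 720 camera.
label = bbox_2d(center=(0.0, 0.0, 2.0), size=(0.2, 0.1, 0.2),
                focal_px=1000.0, cx=640.0, cy=360.0)
```

Since the box follows directly from the scene geometry, every rendered image gets a label produced by the same rule, which removes the annotator-to-annotator variation seen with manual labeling.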

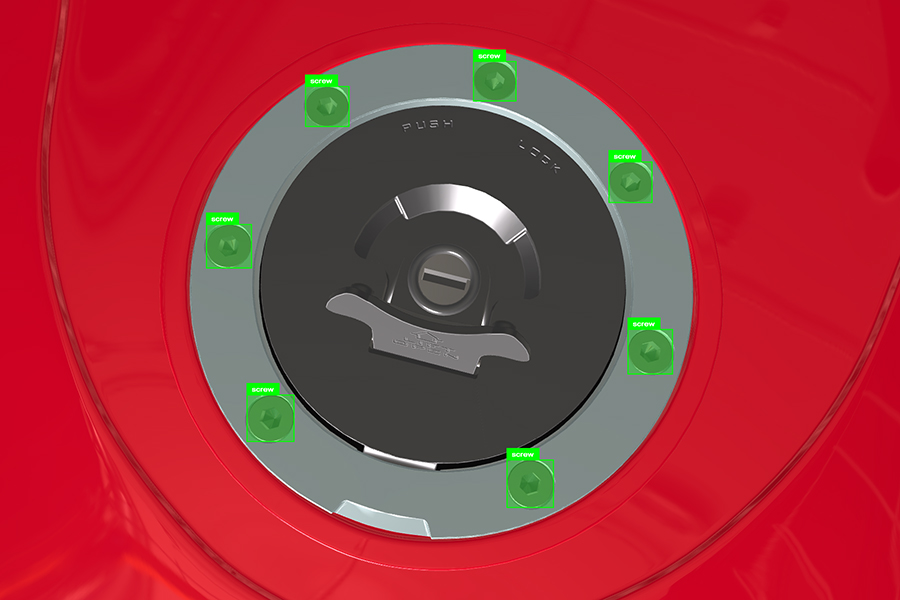

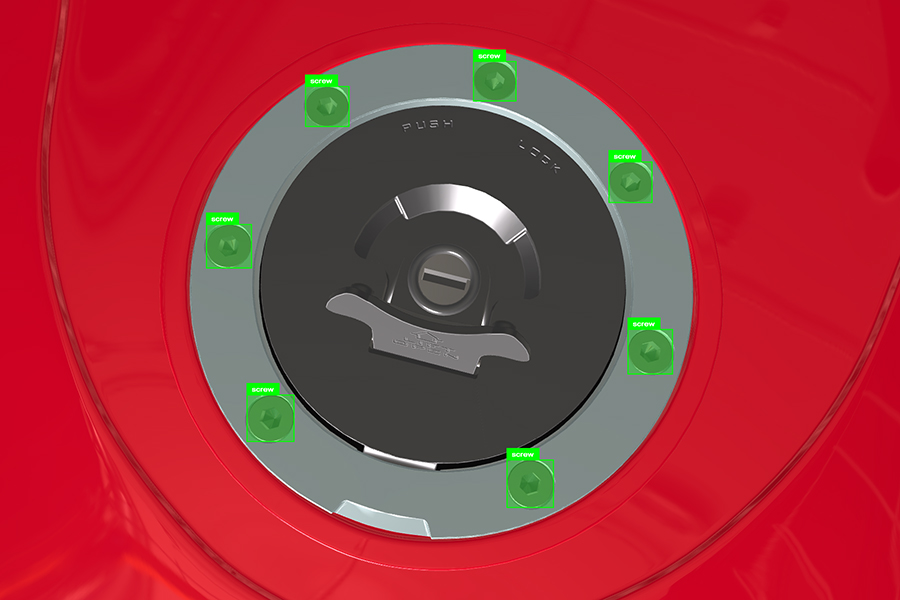

Annotations


Rare Defects and Edge Case Scenarios

In many edge AI use cases, especially in quality inspection or safety monitoring, the most critical events are also the rarest. A visual inspection model, for instance, must detect defects or anomalies in products, but in a high-yield manufacturing line, true defects might occur in only a tiny fraction of items. Training a model to recognize an event that hardly ever happens is a paradox: you need examples of these events, but by definition they are scarce. This data scarcity of rare cases is a formidable challenge. A team might only collect a handful of real defect images over months of production, which is typically not enough for a deep learning model to learn what a defect looks like.

Synthetic data generation is emerging as a game-changer for this problem. Instead of waiting and hoping to capture enough examples of each defect or edge case, we can create realistic defect examples on demand. Using generative techniques, various defect types (from small scratches or dents to missing components) can be generated and blended seamlessly into the scene. This approach has two benefits: it yields quantity (we can generate hundreds of defect images, far exceeding the 3–5 rare examples one might manually collect) and control (we can ensure a range of defect severities, locations, and types are included). Engineers can specify exactly the kind of rare events to simulate, whether it's a tiny crack in a gear or an incorrect assembly alignment. The value of this controlled synthetic defect data is that the model learns not just one example of a defect, but the family of ways a defect can manifest.
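The control aspect can be pictured as a generation plan that enumerates every combination of defect type, severity, and location, so that no combination is left out by chance. The sketch below is an assumption-laden illustration: the defect types come from the examples in the text, while the severity levels, region names, `build_defect_plan` helper, and per-combination count are hypothetical, and the step that actually generates each image is omitted.

```python
import itertools
import random

DEFECT_TYPES = ["scratch", "dent", "missing_component"]  # examples named in the text
SEVERITIES = ["minor", "moderate", "severe"]             # hypothetical levels
REGIONS = ["top", "side", "edge"]                        # hypothetical locations on the part

def build_defect_plan(images_per_combo, rng):
    """List one generation request per image, covering every type/severity/region combo."""
    plan = []
    for dtype, severity, region in itertools.product(DEFECT_TYPES, SEVERITIES, REGIONS):
        for _ in range(images_per_combo):
            plan.append({
                "type": dtype,
                "severity": severity,
                "region": region,
                # A per-image seed lets the generator vary shape and placement reproducibly.
                "seed": rng.randrange(2**32),
            })
    rng.shuffle(plan)
    return plan

plan = build_defect_plan(images_per_combo=10, rng=random.Random(0))
```

With three values per axis and ten images per combination, this plan requests 270 defect images, evenly balanced across types, severities, and regions.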

Synthetic image with rust defect


Overcoming Data Challenges with Synthetic Data

Preparing high-quality training data is one of the most important steps in developing reliable computer vision models for edge devices. Variations in appearance, lighting, annotation quality, and the availability of defect examples all influence how well a model performs once deployed. By combining careful data preparation with synthetic data, it becomes possible to cover a wider range of real-world conditions and create more complete datasets, even when real samples are limited. This approach helps edge models generalize more effectively and reduces the risk of performance issues in production. If you would like to discuss how these methods can support your use case, feel free to contact us.